Artificial Intelligence Engineer Nanodegree - Probabilistic Models

Project: Sign Language Recognition System

Introduction

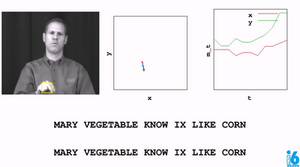

The overall goal of this project is to build a word recognizer for American Sign Language video sequences, demonstrating the power of probabilistic models. In particular, this project employs hidden Markov models (HMMs) to analyze a series of measurements taken from videos of American Sign Language (ASL) collected for research (see the RWTH-BOSTON-104 Database). In this video, the right-hand x and y locations are plotted as the speaker signs the sentence.

The raw data, train, and test sets are pre-defined. You will derive a variety of feature sets (explored in Part 1), as well as implement three different model selection criteria to determine the optimal number of hidden states for each word model (explored in Part 2). Finally, in Part 3 you will implement the recognizer and compare the effects of the different combinations of feature sets and model selection criteria.

At the end of each Part, complete the submission cells with implementations, answer all questions, and pass the unit tests. Then submit the completed notebook for review!

PART 1: Data

Features Tutorial

Load the initial database

A data handler designed for this database is provided in the student codebase as the AslDb class in the asl_data module. This handler creates the initial pandas dataframe from the corpus of data included in the data directory as well as dictionaries suitable for extracting data in a format friendly to the hmmlearn library. We'll use those to create models in Part 2.

To start, let's set up the initial database and select an example set of features for the training set. At the end of Part 1, you will create additional feature sets for experimentation.

import numpy as np

import pandas as pd

from asl_data import AslDb

asl = AslDb() # initializes the database

asl.df.head() # displays the first five rows of the asl database, indexed by video and frame

asl.df.loc[(98, 1), :] # look at the data available for an individual frame (.ix was removed in pandas 1.0)

The frame represented by video 98, frame 1 is shown here:

Feature selection for training the model

The objective of feature selection when training a model is to choose the most relevant variables while keeping the model as simple as possible, thus reducing training time. We can use the raw features already provided or derive our own and add columns to the pandas dataframe asl.df for selection. As an example, in the next cell a feature named 'grnd-ry' is added. This feature is the difference between the right-hand y value and the nose y value, which serves as the "ground" right y value.

from asl_utils import test_features_tryit

asl.df['grnd-ry'] = asl.df['right-y'] - asl.df['nose-y']

asl.df['grnd-rx'] = asl.df['right-x'] - asl.df['nose-x']

asl.df['grnd-ly'] = asl.df['left-y'] - asl.df['nose-y']

asl.df['grnd-lx'] = asl.df['left-x'] - asl.df['nose-x']

# test the code

test_features_tryit(asl)

# collect the features into a list

features_ground = ['grnd-rx','grnd-ry','grnd-lx','grnd-ly']

#show a single set of features for a given (video, frame) tuple

[asl.df.loc[(98, 1), v] for v in features_ground]

Build the training set

Now that we have a feature list defined, we can pass that list to the build_training method to collect the features for all the words in the training set. Each word in the training set has multiple examples from various videos. Below we can see the unique words that have been loaded into the training set:

training = asl.build_training(features_ground)

print("Training words: {}".format(training.words))

The training data in training is an object of class WordsData defined in the asl_data module. In addition to the words list, data can be accessed with the get_all_sequences, get_all_Xlengths, get_word_sequences, and get_word_Xlengths methods. We need the get_word_Xlengths method to train multiple sequences with the hmmlearn library. In the following example, notice that there are two lists: the first is a concatenation of all the sequences (the X portion) and the second is a list of the sequence lengths (the lengths portion).

training.get_word_Xlengths('CHOCOLATE')
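To make the X/lengths layout concrete, here is a minimal sketch using two made-up sequences. to_xlengths is a hypothetical helper and is not part of asl_data. It assumes each sequence is a list of per-frame feature lists, the shape returned by get_word_sequences. hmmlearn's fit and score expect exactly this pair: all frames stacked row-wise in X, and lengths summing to the number of rows.

```python
import numpy as np


def to_xlengths(sequences):
    """Stack (n_frames, n_features) sequences into one array plus their lengths.

    Hypothetical helper for illustration; it mirrors the (X, lengths) pair
    returned by get_word_Xlengths.
    """
    X = np.concatenate([np.asarray(seq, dtype=float) for seq in sequences])
    lengths = [len(seq) for seq in sequences]
    return X, lengths


# two toy sequences of (grnd-rx, grnd-ry) values; the numbers are invented
toy_seqs = [[[9, 113], [8, 110], [5, 100]],
            [[-3, 90], [-1, 95]]]
toy_X, toy_lengths = to_xlengths(toy_seqs)
print(toy_X.shape, toy_lengths)
```

The lengths tell hmmlearn where one example ends and the next begins. As a result, no transition is learned across the boundary between two separate examples.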

More feature sets

So far we have a simple feature set that is enough to get started modeling. However, we might get better results if we manipulate the raw values a bit more, so we will go ahead and set up some other options now for experimentation later. For example, we could normalize each speaker's range of motion with grouped statistics using Pandas stats functions and pandas groupby. Below is an example for finding the means of all speaker subgroups.

Try it!

from asl_utils import test_std_tryit

# TODO Create a dataframe named `df_std` with standard deviations grouped by speaker

df_std = asl.df.groupby('speaker').std()

df_std

asl.df['left-x-std']= asl.df['speaker'].map(df_std['left-x'])

asl.df['grnd-lx-std']= asl.df['speaker'].map(df_std['grnd-lx'])

asl.df['left-y-std']= asl.df['speaker'].map(df_std['left-y'])

asl.df['grnd-ly-std']= asl.df['speaker'].map(df_std['grnd-ly'])

asl.df['right-x-std']= asl.df['speaker'].map(df_std['right-x'])

asl.df['grnd-rx-std']= asl.df['speaker'].map(df_std['grnd-rx'])

asl.df['right-y-std']= asl.df['speaker'].map(df_std['right-y'])

asl.df['grnd-ry-std']= asl.df['speaker'].map(df_std['grnd-ry'])

df_means = asl.df.groupby('speaker').mean()

df_means

asl.df['left-x-mean']= asl.df['speaker'].map(df_means['left-x'])

asl.df['grnd-lx-mean']= asl.df['speaker'].map(df_means['grnd-lx'])

asl.df['left-y-mean']= asl.df['speaker'].map(df_means['left-y'])

asl.df['grnd-ly-mean']= asl.df['speaker'].map(df_means['grnd-ly'])

asl.df['right-x-mean']= asl.df['speaker'].map(df_means['right-x'])

asl.df['grnd-rx-mean']= asl.df['speaker'].map(df_means['grnd-rx'])

asl.df['right-y-mean']= asl.df['speaker'].map(df_means['right-y'])

asl.df['grnd-ry-mean']= asl.df['speaker'].map(df_means['grnd-ry'])

asl.df.head()

# test the code

#test_std_tryit(df_std)

Features Implementation Submission

Implement four feature sets and answer the question that follows.

normalized Cartesian coordinates

- use mean and standard deviation statistics and the standard score equation to account for speakers with different heights and arm lengths

polar coordinates

- calculate polar coordinates with Cartesian to polar equations

- use the np.arctan2 function and swap the x and y axes to move the angle's discontinuity to 12 o'clock. np.arctan2(y, x) returns values in $(-\pi, \pi]$ and jumps between $\pi$ and $-\pi$ along the negative x axis, i.e. directly to the left of the speaker's nose in the image, which may be in the signing area and interfere with results. Computing np.arctan2(x, y) instead moves that discontinuity to the negative y axis. Because image y values increase downward, this is directly above the speaker's head, an area not generally used in signing.

delta difference

- as described in Thad's lecture, use the difference in values between one frame and the next frame as features

- pandas diff method and fillna method will be helpful for this one

custom features

- These are your own design; combine techniques used above or come up with something else entirely. We look forward to seeing what you come up with!

Some ideas to get you started:

- normalize using a feature scaling equation

- normalize the polar coordinates

- add additional deltas


# TODO add features for normalized by speaker values of left, right, x, y

# Name these 'norm-rx', 'norm-ry', 'norm-lx', and 'norm-ly'

# using Z-score scaling (X-Xmean)/Xstd

import numpy as np

features_norm = ['norm-rx', 'norm-ry', 'norm-lx','norm-ly']

asl.df['norm-lx'] = ((asl.df['left-x'] - asl.df['left-x-mean'] ) / asl.df['left-x-std'])

asl.df['norm-ly'] = ((asl.df['left-y'] - asl.df['left-y-mean'] ) / asl.df['left-y-std'])

asl.df['norm-rx'] = ((asl.df['right-x'] - asl.df['right-x-mean'] ) / asl.df['right-x-std'])

asl.df['norm-ry'] = ((asl.df['right-y'] - asl.df['right-y-mean'] ) / asl.df['right-y-std'])

asl.df.columns
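The per-speaker mean and standard deviation columns above are built with groupby(...) followed by map(...). A more compact alternative uses groupby(...).transform, which broadcasts each speaker's statistic back onto that speaker's rows in one step. It is sketched here on a made-up two-speaker frame rather than on asl.df. It uses the same sample standard deviation (ddof=1) as groupby('speaker').std(), so it should reproduce the norm-* columns.

```python
import pandas as pd

# made-up frame: two speakers with very different ranges of motion
toy_df = pd.DataFrame({
    "speaker": ["a", "a", "a", "b", "b", "b"],
    "right-x": [100.0, 110.0, 120.0, 200.0, 240.0, 280.0],
})

by_speaker = toy_df.groupby("speaker")["right-x"]
# transform returns one value per original row, so each row gets its own speaker's
# mean and sample standard deviation (ddof=1, same as groupby(...).std())
toy_df["norm-rx"] = (toy_df["right-x"] - by_speaker.transform("mean")) / by_speaker.transform("std")
print(toy_df)
```

On asl.df, this would read, for instance, g = asl.df.groupby('speaker')['right-x'] followed by asl.df['norm-rx'] = (asl.df['right-x'] - g.transform('mean')) / g.transform('std'). This avoids the intermediate *-mean and *-std columns, at the cost of not keeping them for reuse.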

# TODO add features for polar coordinate values where the nose is the origin

# Name these 'polar-rr', 'polar-rtheta', 'polar-lr', and 'polar-ltheta'

# Note that 'polar-rr' and 'polar-rtheta' refer to the radius and angle

features_polar = ['polar-rr', 'polar-rtheta', 'polar-lr', 'polar-ltheta']

def cart2pol(x, y):
    # radius from the nose (origin) to the hand
    rho = np.sqrt(x**2 + y**2)
    # x and y are deliberately swapped so the -pi/pi discontinuity lies above the head
    phi = np.arctan2(x, y)
    return (rho, phi)

def pol2cart(rho, phi):
    # inverse of cart2pol, using the same swapped-axis convention (angle measured from the +y axis)
    x = rho * np.sin(phi)
    y = rho * np.cos(phi)
    return (x, y)

(r, p) = cart2pol(asl.df['grnd-rx'], asl.df['grnd-ry'])
asl.df['polar-rr'] = r
asl.df['polar-rtheta'] = p

(r, p) = cart2pol(asl.df['grnd-lx'], asl.df['grnd-ly'])
asl.df['polar-lr'] = r
asl.df['polar-ltheta'] = p
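Here is a quick numeric check of the swapped-axis conversion, written as a standalone sketch. cart2pol_swapped is simply a renamed copy so the block runs on its own. It uses the ground values 9, 113, -12, 119 for video 98, frame 1, taken from the ground-feature unit test. It reproduces the polar values that test expects and shows where the angle jumps.

```python
import numpy as np


def cart2pol_swapped(x, y):
    """Radius and swapped-axis angle of (x, y) relative to the nose."""
    rho = np.sqrt(x ** 2 + y ** 2)
    # arctan2(x, y) measures the angle from the +y axis (straight down in image
    # coordinates), so the jump between -pi and +pi lies on the -y axis, above the head
    phi = np.arctan2(x, y)
    return rho, phi


# ground features for video 98, frame 1 (values from the ground-feature unit test)
right_r, right_theta = cart2pol_swapped(9, 113)
left_r, left_theta = cart2pol_swapped(-12, 119)
print(right_r, right_theta, left_r, left_theta)

# hands straight below the nose: the angle is continuous (both values near 0)
below = [cart2pol_swapped(dx, 50.0)[1] for dx in (-1e-6, 1e-6)]
# straight above the head: the angle jumps from about -pi to about +pi
above = [cart2pol_swapped(dx, -50.0)[1] for dx in (-1e-6, 1e-6)]
print(below, above)
```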

df_std = asl.df.groupby('speaker').std()

df_mean = asl.df.groupby('speaker').mean()

asl.df['polar-rr-std']= asl.df['speaker'].map(df_std['polar-rr'])

asl.df['polar-lr-std']= asl.df['speaker'].map(df_std['polar-lr'])

asl.df['polar-ltheta-std']= asl.df['speaker'].map(df_std['polar-ltheta'])

asl.df['polar-rtheta-std']= asl.df['speaker'].map(df_std['polar-rtheta'])

asl.df['polar-rr-mean']= asl.df['speaker'].map(df_mean['polar-rr'])

asl.df['polar-lr-mean']= asl.df['speaker'].map(df_mean['polar-lr'])

asl.df['polar-ltheta-mean']= asl.df['speaker'].map(df_mean['polar-ltheta'])

asl.df['polar-rtheta-mean']= asl.df['speaker'].map(df_mean['polar-rtheta'])

asl.df['norm-polar-rr'] = ((asl.df['polar-rr'] - asl.df['polar-rr-mean'] ) / asl.df['polar-rr-std'])

asl.df['norm-polar-lr'] = ((asl.df['polar-lr'] - asl.df['polar-lr-mean'] ) / asl.df['polar-lr-std'])

asl.df['norm-polar-ltheta'] = ((asl.df['polar-ltheta'] - asl.df['polar-ltheta-mean'] ) / asl.df['polar-ltheta-std'])

asl.df['norm-polar-rtheta'] = ((asl.df['polar-rtheta'] - asl.df['polar-rtheta-mean'] ) / asl.df['polar-rtheta-std'])

features_custom = ['norm-polar-lr','norm-polar-rr','norm-polar-ltheta','norm-polar-rtheta']

# TODO add features for left, right, x, y differences by one time step, i.e. the "delta" values discussed in the lecture

# Name these 'delta-rx', 'delta-ry', 'delta-lx', and 'delta-ly'

asl.df['delta-rx'] = asl.df['right-x'].diff(periods=1)

asl.df['delta-ry'] = asl.df['right-y'].diff(periods=1)

asl.df['delta-lx'] = asl.df['left-x'].diff(periods=1)

asl.df['delta-ly'] = asl.df['left-y'].diff(periods=1)

features_delta = ['delta-rx', 'delta-ry', 'delta-lx', 'delta-ly']

sample = asl.df.loc[(98, 18), features_delta].tolist()

#sample

asl.df.fillna(0, inplace=True)
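Note that calling diff on the whole column, as above, also takes the difference between the first frame of each video and the last frame of the previous video. A per-video alternative groups on the first index level so the delta restarts at every video. It is sketched here on a made-up frame with the same (video, frame) MultiIndex layout as asl.df.

```python
import pandas as pd

# made-up frames with the same (video, frame) MultiIndex layout as asl.df
toy_idx = pd.MultiIndex.from_tuples([(98, 0), (98, 1), (98, 2), (100, 0), (100, 1)],
                                    names=["video", "frame"])
toy_df = pd.DataFrame({"right-x": [149, 150, 152, 170, 171]}, index=toy_idx)

# whole-column diff: frame 0 of video 100 is compared with the last frame of video 98
toy_df["delta-rx-naive"] = toy_df["right-x"].diff().fillna(0)
# per-video diff: grouping on the first index level restarts the delta at each video
toy_df["delta-rx"] = toy_df.groupby(level=0)["right-x"].diff().fillna(0)
print(toy_df)
```

Applied to the notebook, this would be, for example, asl.df['delta-rx'] = asl.df.groupby(level=0)['right-x'].diff().fillna(0). That assumes the video is the first index level, consistent with the "indexed by video and frame" description at the top of the notebook. The frames checked by the unit tests lie inside video 98, so the tested values should be unaffected.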

#asl.df

# TODO add features of your own design, which may be a combination of the above or something else

# Name these whatever you would like

# TODO define a list named 'features_custom' for building the training set

import sklearn

features_ground_normal = ['grnd-rx-norm','grnd-ry-norm', 'grnd-lx-norm', 'grnd-ly-norm']

asl.df['grnd-rx-norm'] = ((asl.df['grnd-rx'] - asl.df['grnd-rx-mean'] ) / asl.df['grnd-rx-std'])

asl.df['grnd-ry-norm'] = ((asl.df['grnd-ry'] - asl.df['grnd-ry-mean'] ) / asl.df['grnd-ry-std'])

asl.df['grnd-lx-norm'] = ((asl.df['grnd-lx'] - asl.df['grnd-lx-mean'] ) / asl.df['grnd-lx-std'])

asl.df['grnd-ly-norm'] = ((asl.df['grnd-ly'] - asl.df['grnd-ly-mean'] ) / asl.df['grnd-ly-std'])

asl.df.head()

#features_ground_normal = sklearn.preprocessing.normalize(features_ground)

Question 1: What custom features did you choose for the features_custom set and why?

Answer 1: For the custom features I chose to use the ground variables, which are the left or right hand coordinate minus the nose coordinate. This effectively makes the nose the zero position, so hand positions are measured relative to it, creating an anchor point for calculations. I then normalized the ground variables per speaker with the standard score (the features_ground_normal list), to account for speakers with different heights and arm lengths.

Features Unit Testing

Run the following unit tests as a sanity check on the defined "ground", "norm", "polar", and "delta" feature sets. The test simply looks for some valid values but is not exhaustive. However, the project should not be submitted if these tests don't pass.

import unittest

pd.set_option('display.float_format', lambda x: '%.3f' % x)

# import numpy as np

class TestFeatures(unittest.TestCase):

    def test_features_ground(self):
        sample = asl.df.loc[(98, 1), features_ground].tolist()
        self.assertEqual(sample, [9, 113, -12, 119])

    def test_features_norm(self):
        sample = asl.df.loc[(98, 1), features_norm].tolist()
        np.testing.assert_almost_equal(sample, [ 1.153, 1.663, -0.891, 0.742], 3)

    def test_features_polar(self):
        sample = asl.df.loc[(98, 1), features_polar].tolist()
        np.testing.assert_almost_equal(sample, [113.3578, 0.0794, 119.603, -0.1005], 3)

    def test_features_delta(self):
        sample = asl.df.loc[(98, 0), features_delta].tolist()
        self.assertEqual(sample, [0, 0, 0, 0])
        sample = asl.df.loc[(98, 18), features_delta].tolist()
        self.assertTrue(sample in [[-16, -5, -2, 4], [-14, -9, 0, 0]], "Sample value found was {}".format(sample))

suite = unittest.TestLoader().loadTestsFromTestCase(TestFeatures)

unittest.TextTestRunner().run(suite)

PART 2: Model Selection

Model Selection Tutorial

The objective of Model Selection is to tune the number of states for each word HMM prior to testing on unseen data. In this section you will explore three methods:

- Log likelihood using cross-validation folds (CV)

- Bayesian Information Criterion (BIC)

- Discriminative Information Criterion (DIC)

Train a single word

Now that we have built a training set with sequence data, we can "train" models for each word. As a simple starting example, we train a single word using a Gaussian hidden Markov model (HMM). Calling the fit method runs the Baum-Welch Expectation-Maximization (EM) algorithm. It iteratively re-estimates the model parameters for the specified number of hidden states from a group of sample sequences. For this example, we assume the correct number of hidden states is 4, but that is just a guess. How do we know what the "best" number of states for training is? We will need some model selection technique to choose the best parameter.

import warnings

from hmmlearn.hmm import GaussianHMM

def train_a_word(word, num_hidden_states, features):
    warnings.filterwarnings("ignore", category=DeprecationWarning)
    training = asl.build_training(features)
    X, lengths = training.get_word_Xlengths(word)
    # Baum-Welch (EM) training on all example sequences of the word
    model = GaussianHMM(n_components=num_hidden_states, n_iter=1000).fit(X, lengths)
    # log likelihood of the training sequences under the trained model
    logL = model.score(X, lengths)
    return model, logL

demoword = 'BOOK'

model, logL = train_a_word(demoword, 4, features_ground)

print("Number of states trained in model for {} is {}".format(demoword, model.n_components))

print("logL = {}".format(logL))

The HMM model has been trained and information can be pulled from the model, including means and variances for each feature and hidden state. The log likelihood for any individual sample or group of samples can also be calculated with the score method.

def show_model_stats(word, model):
    print("Number of states trained in model for {} is {}".format(word, model.n_components))
    # per-state variances are the diagonals of the covariance matrices
    variance = np.array([np.diag(model.covars_[i]) for i in range(model.n_components)])
    for i in range(model.n_components):  # for each hidden state
        print("hidden state #{}".format(i))
        print("mean = ", model.means_[i])
        print("variance = ", variance[i])
        print()

show_model_stats(demoword, model)
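The score method returns the log likelihood log P(X | model), which is computed with the forward algorithm. To make that number concrete, here is a from-scratch sketch for a Gaussian HMM with diagonal covariances (GaussianHMM's default covariance_type is "diag"). It is checked against brute-force enumeration of every hidden-state path on a tiny made-up model. It illustrates the computation and is not hmmlearn's implementation. When several sequences are scored together, they are treated as independent, so their log likelihoods add.

```python
import numpy as np
from itertools import product


def diag_gauss_logpdf(X, means, variances):
    """log N(x_t | mean_k, diag(var_k)) for every frame t and state k; returns shape (T, K)."""
    diff = X[:, None, :] - means[None, :, :]
    return -0.5 * (np.log(2 * np.pi * variances)[None, :, :] + diff ** 2 / variances[None, :, :]).sum(axis=2)


def forward_loglik(X, startprob, transmat, means, variances):
    """log P(X | HMM) for one sequence via the forward algorithm, kept in log space."""
    log_b = diag_gauss_logpdf(X, means, variances)
    log_alpha = np.log(startprob) + log_b[0]
    for t in range(1, len(X)):
        m = log_alpha.max()  # shift before exponentiating to avoid underflow
        log_alpha = m + np.log(np.exp(log_alpha - m) @ transmat) + log_b[t]
    m = log_alpha.max()
    return m + np.log(np.exp(log_alpha - m).sum())


def brute_force_loglik(X, startprob, transmat, means, variances):
    """Same quantity by summing over every hidden-state path (only feasible for tiny examples)."""
    b = np.exp(diag_gauss_logpdf(X, means, variances))
    total = 0.0
    for path in product(range(len(startprob)), repeat=len(X)):
        p = startprob[path[0]] * b[0, path[0]]
        for t in range(1, len(X)):
            p *= transmat[path[t - 1], path[t]] * b[t, path[t]]
        total += p
    return np.log(total)


# made-up 2-state, 1-feature model and a 4-frame sequence
toy_start = np.array([0.6, 0.4])
toy_trans = np.array([[0.7, 0.3], [0.2, 0.8]])
toy_means = np.array([[0.0], [3.0]])
toy_vars = np.array([[1.0], [2.0]])
toy_X = np.array([[0.1], [0.5], [2.8], [3.3]])

fwd = forward_loglik(toy_X, toy_start, toy_trans, toy_means, toy_vars)
brute = brute_force_loglik(toy_X, toy_start, toy_trans, toy_means, toy_vars)
print(fwd, brute)
```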

Try it!

Experiment by changing the feature set, word, and/or num_hidden_states values in the next cell to see changes in values.

my_testword = 'CHOCOLATE'

model, logL = train_a_word(my_testword, 4, features_delta) # Experiment here with different parameters

show_model_stats(my_testword, model)

print("logL = {}".format(logL))

Visualize the hidden states

We can plot the means and variances for each state and feature. Try varying the number of states trained for the HMM model and examine the variances. Are there some models that are "better" than others? How can you tell? We would like to hear what you think in the classroom online.

%matplotlib inline

ModelSelector class

Review the ModelSelector class from the codebase found in the my_model_selectors.py module. It is designed to be a strategy pattern for choosing different model selectors. For the project submission in this section, subclass ModelSelector to implement the following model selectors. In other words, you will write your own classes/functions in the my_model_selectors.py module and run them from this notebook:

- SelectorCV: Log likelihood with CV

- SelectorBIC: BIC

- SelectorDIC: DIC

You will train each word in the training set with a range of values for the number of hidden states, and then score these alternatives with the model selector, choosing the "best" according to each strategy. The simple case of training with a constant value for n_components can be called using the provided SelectorConstant subclass as follows:

from my_model_selectors import SelectorConstant

training = asl.build_training(features_ground) # Experiment here with different feature sets defined in part 1

word = 'VEGETABLE' # Experiment here with different words

model = SelectorConstant(training.get_all_sequences(), training.get_all_Xlengths(), word, n_constant=3).select()

print("Number of states trained in model for {} is {}".format(word, model.n_components))

Cross-validation folds

If we simply score the model with the log likelihood calculated from the feature sequences it has been trained on, we should expect more complex models to have higher likelihoods. However, that doesn't tell us which would have a better likelihood score on unseen data, because the model will likely overfit as complexity is added. To estimate which topology is better using only the training data, we can compare scores using cross-validation. One technique for cross-validation is to break the training set into "folds" and rotate which fold is left out of training. The left-out fold is then scored. This gives us a proxy for how well each model performs on unseen data. In the following example, a set of word sequences is broken into three folds using the scikit-learn KFold class. When you implement SelectorCV, you will use this technique.

from sklearn.model_selection import KFold

training = asl.build_training(features_ground) # Experiment here with different feature sets

word = 'VEGETABLE' # Experiment here with different words

word_sequences = training.get_word_sequences(word)

split_method = KFold(n_splits=3)  # explicit: the default changed from 3 to 5 in scikit-learn 0.22

for cv_train_idx, cv_test_idx in split_method.split(word_sequences):
    print("Train fold indices:{} Test fold indices:{}".format(cv_train_idx, cv_test_idx))  # view indices of the folds

Tip: In order to run hmmlearn training using the X,lengths tuples on the new folds, subsets must be combined based on the indices given for the folds. A helper utility has been provided in the asl_utils module named combine_sequences for this purpose.
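The fold rotation and recombination described above can be sketched without scikit-learn or asl_utils.

- kfold_indices reproduces the contiguous, unshuffled fold layout of KFold(n_splits): the first n % n_splits folds get one extra item.
- combine_folds is a hypothetical stand-in for combine_sequences, whose exact signature is not shown here.
- fit_and_score is a placeholder callable you would implement with GaussianHMM.

KFold needs at least as many sequences as folds. A selector would therefore typically use n_splits=min(3, len(sequences)) and fall back to another strategy for words with a single example.

```python
def kfold_indices(n_items, n_splits=3):
    """Yield (train_idx, test_idx) for contiguous, unshuffled folds, laid out like KFold(n_splits)."""
    if not 2 <= n_splits <= n_items:
        raise ValueError("need 2 <= n_splits <= n_items")
    # the first n_items % n_splits folds get one extra item
    sizes = [n_items // n_splits + (1 if i < n_items % n_splits else 0) for i in range(n_splits)]
    start = 0
    for size in sizes:
        test_idx = list(range(start, start + size))
        train_idx = [i for i in range(n_items) if not start <= i < start + size]
        yield train_idx, test_idx
        start += size


def combine_folds(sequences, indices):
    """Hypothetical stand-in for asl_utils.combine_sequences: stack the chosen sequences into (X, lengths)."""
    X = [frame for i in indices for frame in sequences[i]]
    lengths = [len(sequences[i]) for i in indices]
    return X, lengths


def mean_cv_score(sequences, fit_and_score, n_splits=3):
    """Average held-out score over the folds.

    fit_and_score(train_X, train_lengths, test_X, test_lengths) is a placeholder that should
    train a model on the training folds and return its log likelihood on the test fold.
    """
    scores = []
    for train_idx, test_idx in kfold_indices(len(sequences), n_splits):
        train_X, train_lengths = combine_folds(sequences, train_idx)
        test_X, test_lengths = combine_folds(sequences, test_idx)
        scores.append(fit_and_score(train_X, train_lengths, test_X, test_lengths))
    return sum(scores) / len(scores)


# four toy sequences of 1-D frames (invented values)
toy_sequences = [[[1], [2]], [[3]], [[4], [5], [6]], [[7]]]
for train_idx, test_idx in kfold_indices(len(toy_sequences)):
    print("train", train_idx, "test", test_idx)
```

SelectorCV would then keep the number of states whose mean held-out log likelihood is highest.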

Scoring models with other criteria

Scoring model topologies with BIC balances fit and complexity within the training set for each word. In the BIC equation, a penalty term penalizes complexity to avoid overfitting, so that it is not necessary to also use cross-validation in the selection process. There are a number of references on the internet for this criterion. These slides include a formula you may find helpful for your implementation.
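Here is a minimal sketch of the BIC score in its common form, $\mathrm{BIC} = -2\log L + p\log N$. L is the model's likelihood on the word's training data, N is the number of data points (frames), and p is the number of free parameters; lower is better. The parameter count below is one common choice for a GaussianHMM with diagonal covariances: transition rows and start probabilities each lose one degree of freedom because they sum to 1. Treat that count as an assumption and adjust it if your covariance type differs. All scores are invented.

```python
import math


def hmm_free_params(n_states, n_features):
    """Free parameters of a Gaussian HMM with diagonal covariances (one common count)."""
    transitions = n_states * (n_states - 1)  # each row of the transition matrix sums to 1
    starts = n_states - 1                    # start probabilities sum to 1
    means = n_states * n_features
    variances = n_states * n_features        # diagonal covariance: one variance per feature per state
    return transitions + starts + means + variances


def bic(log_likelihood, n_states, n_features, n_frames):
    """-2 log L + p log N; lower is better."""
    p = hmm_free_params(n_states, n_features)
    return -2.0 * log_likelihood + p * math.log(n_frames)


# invented scores: the 10-state model fits the training data better...
small = bic(-1000.0, n_states=3, n_features=4, n_frames=200)
large = bic(-980.0, n_states=10, n_features=4, n_frames=200)
print(round(small, 1), round(large, 1))  # ...but the smaller model gets the lower (better) BIC
```

Here the 10-state model's log likelihood is 20 points higher, but its penalty grows from 32 log N to 179 log N, so BIC prefers the 3-state model.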

The advantages of scoring model topologies with DIC over BIC are presented by Alain Biem in this reference (also found here). DIC scores the discriminant ability of a training set for one word against competing words. Instead of a penalty term for complexity, it provides a penalty if model likelihoods for non-matching words are too similar to model likelihoods for the correct word in the word set.
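In the form commonly used for this project, the DIC for a candidate model $\theta$ trained on word i is $\log P(X_i \mid \theta) - \frac{1}{M-1}\sum_{j \ne i} \log P(X_j \mid \theta)$, where M is the number of words; higher is better. The sketch assumes the log likelihood of every word's training data under the candidate model has already been computed (for example with model.score on each word's X, lengths) and is passed in as a dict. All numbers are made up.

```python
def dic(log_likelihoods, target_word):
    """log P(X_target | model) minus the mean log P(X_other | model); higher is better.

    log_likelihoods maps every word to the log likelihood of that word's training
    data under ONE candidate model (the one being evaluated for target_word).
    """
    others = [ll for word, ll in log_likelihoods.items() if word != target_word]
    if not others:
        raise ValueError("DIC needs at least one competing word")
    return log_likelihoods[target_word] - sum(others) / len(others)


# invented scores for two candidate BOOK models
model_a = {"BOOK": -100.0, "FISH": -300.0, "JOHN": -500.0}
model_b = {"BOOK": -90.0, "FISH": -120.0, "JOHN": -130.0}
print(dic(model_a, "BOOK"), dic(model_b, "BOOK"))  # 300.0 35.0
```

model_b fits BOOK slightly better, but it also fits the competing words almost as well. DIC therefore prefers model_a, which separates BOOK from the other words more clearly.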

Model Selection Implementation Submission

Implement SelectorCV, SelectorBIC, and SelectorDIC classes in the my_model_selectors.py module. Run the selectors on the following five words. Then answer the questions about your results.

Tip: The hmmlearn library may not be able to train or score all models. Implement try/except constructs as necessary to eliminate non-viable models from consideration.
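One way to structure that try/except is a loop that scores each candidate number of states and skips the ones that fail. build_and_score is a hypothetical callable; for example, it could fit a GaussianHMM with n states and return its CV, BIC, or DIC score. The sketch assumes failures surface as ValueError, which hmmlearn typically raises for degenerate models, so widen the except clause if you see other exception types. Non-finite scores are skipped as well.

```python
import math


def select_best(candidates, build_and_score, higher_is_better=True):
    """Return (best_n, best_score) over candidate state counts, skipping models that fail.

    build_and_score is a hypothetical callable n -> score (e.g. CV log likelihood,
    BIC, or DIC for a GaussianHMM with n states).
    """
    best_n, best_score = None, None
    for n in candidates:
        try:
            score = build_and_score(n)
        except ValueError:
            continue  # non-viable model: drop it from consideration
        if not math.isfinite(score):
            continue  # e.g. NaN or -inf from a degenerate fit
        if best_score is None or (score > best_score if higher_is_better else score < best_score):
            best_n, best_score = n, score
    return best_n, best_score


def fake_score(n):
    # stand-in scorer: fails for large n, returns NaN for n == 2, peaks at n == 3
    if n > 5:
        raise ValueError("too many states for the available data")
    if n == 2:
        return float("nan")
    return -(n - 3) ** 2


print(select_best(range(2, 9), fake_score))  # (3, 0)
```

For BIC, pass higher_is_better=False, since lower BIC is better.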

from my_model_selectors import SelectorCV

import asl_utils

words_to_train = ['FISH', 'BOOK', 'VEGETABLE', 'FUTURE', 'JOHN']

import timeit

training = asl.build_training(features_ground) # Experiment here with different feature sets defined in part 1

sequences = training.get_all_sequences()

Xlengths = training.get_all_Xlengths()

for word in words_to_train:
    start = timeit.default_timer()
    model = SelectorCV(sequences, Xlengths, word,
                       min_n_components=2, max_n_components=15, random_state=14).select()
    end = timeit.default_timer() - start
    if model is not None:
        #print(model)
        print("Training complete for {} with {} states with time {} seconds".format(word, model.n_components, end))
    else:
        print("Training failed for {}".format(word))

#%load_ext autoreload

#%autoreload 2

# TODO: Implement SelectorBIC in module my_model_selectors.py

from my_model_selectors import SelectorBIC

training = asl.build_training(features_ground) # Experiment here with different feature sets defined in part 1

sequences = training.get_all_sequences()

Xlengths = training.get_all_Xlengths()

for word in words_to_train:
    start = timeit.default_timer()
    model = SelectorBIC(sequences, Xlengths, word,
                        min_n_components=2, max_n_components=14, random_state=14).select()
    end = timeit.default_timer() - start
    if model is not None:
        print("Training complete for {} with {} states with time {} seconds".format(word, model.n_components, end))
    else:
        print("Training failed for {}".format(word))

#%load_ext autoreload

#%autoreload 2

# TODO: Implement SelectorDIC in module my_model_selectors.py

from my_model_selectors import SelectorDIC

training = asl.build_training(features_polar) # Experiment here with different feature sets defined in part 1

sequences = training.get_all_sequences()

Xlengths = training.get_all_Xlengths()

for word in words_to_train:
    start = timeit.default_timer()
    model = SelectorDIC(sequences, Xlengths, word,
                        min_n_components=2, max_n_components=15, random_state=14).select()
    end = timeit.default_timer() - start
    if model is not None:
        print("Training complete for {} with {} states with time {} seconds".format(word, model.n_components, end))
    else:
        print("Training failed for {}".format(word))

Question 2: Compare and contrast the possible advantages and disadvantages of the various model selectors implemented.

Answer 2: In this section we will talk about our three model selectors and how they perform. Our first method, SelectorCV, uses K-fold cross-validation, with 2 or 3 folds depending on the number of example sequences. It trains on a subset of the data and then scores the model on held-out data it has not seen. In this way we can test how our model performs against new data, to combat overfitting; this is the validation part of cross-validation. A drawback of this method is that it requires several training sequences per word. Some words had only one example, so splitting the data into training and test folds was impossible.

Our second model selection technique uses the Bayesian Information Criterion, or BIC. Our BIC selector computes the log-likelihood L of a given model and multiplies it by -2, so that lower scores are better and model selection becomes a minimization problem. It then adds a penalty term, p log N, that grows with the number of free parameters p. All else being equal, the selector therefore chooses the less complex model. The number of free parameters also grows with the feature dimensionality. As a result, BIC can favor overly simple models when the features are high-dimensional, regardless of the complexity of the problem. AIC uses a smaller penalty (2p rather than p log N), so it is less prone to this.

Our third and final selector is the Discriminative Information Criterion, or DIC. In this technique, the score is the log-likelihood of the target word under the model minus the average log-likelihood of all the other words under the same model. The result is a selection process that favors models that score highly on the target word and poorly on all the other words. Because DIC has no explicit complexity penalty, it tends to tolerate models with more states than BIC does. For each word, the competing words act as anti-evidence. They improve our classification task by favoring a model that scores poorly on the other words and well on the target word.

Model Selector Unit Testing

Run the following unit tests as a sanity check on the implemented model selectors. The test simply looks for valid interfaces but is not exhaustive. However, the project should not be submitted if these tests don't pass.

from asl_test_model_selectors import TestSelectors

suite = unittest.TestLoader().loadTestsFromTestCase(TestSelectors)

unittest.TextTestRunner().run(suite)

PART 3: Recognizer

The objective of this section is to "put it all together". Using the four feature sets created and the three model selectors, you will experiment with the models and present your results. Instead of training only five specific words as in the previous section, train the entire set with a feature set and model selector strategy.

Recognizer Tutorial

Train the full training set

The following example trains the entire set with the features_polar feature set and the SelectorBIC model selector. Use this pattern for your experimentation and final submission cells.

# autoreload for automatically reloading changes made in my_model_selectors and my_recognizer

#%load_ext autoreload

#%autoreload 2

import my_model_selectors

def train_all_words(features, model_selector):
    training = asl.build_training(features)  # Experiment here with different feature sets defined in part 1
    sequences = training.get_all_sequences()
    Xlengths = training.get_all_Xlengths()
    model_dict = {}
    for word in training.words:
        model = model_selector(sequences, Xlengths, word,
                               n_constant=3).select()
        model_dict[word] = model
    return model_dict

models = train_all_words(features_polar, SelectorBIC)

print("Number of word models returned = {}".format(len(models)))

Load the test set

The build_test method in AslDb is similar to the build_training method already presented, but there are a few differences:

- the object is of type SinglesData

- the internal dictionary keys are the index of the test word rather than the word itself

- the getter methods are get_all_sequences, get_all_Xlengths, get_item_sequences and get_item_Xlengths

test_set = asl.build_test(features_polar)

print("Number of test set items: {}".format(test_set.num_items))

print("Number of test set sentences: {}".format(len(test_set.sentences_index)))

Recognizer Implementation Submission

For the final project submission, students must implement a recognizer following guidance in the my_recognizer.py module. Experiment with the four feature sets and the three model selection methods (that's 12 possible combinations). You can add and remove cells for experimentation or run the recognizers locally in some other way during your experiments, but retain the results for your discussion. For submission, you will provide code cells of only three interesting combinations for your discussion (see questions below). At least one of these should produce a word error rate of less than 60%, i.e. WER < 0.60 .

Tip: The hmmlearn library may not be able to train or score all models. Implement try/except constructs as necessary to eliminate non-viable models from consideration.
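Here is a minimal sketch of the recognition loop and the word error rate. Each test item is scored against every word model, and the per-word log likelihoods are kept as a list of dicts, matching the probabilities list passed to pd.DataFrame at the end of the notebook. The best-scoring word becomes the guess. score is a hypothetical callable standing in for model.score(X, lengths), and the toy "models" here are just numbers. Because each test item is a single word, WER here reduces to the fraction of items guessed wrong (substitutions over total words).

```python
def recognize_items(models, test_items, score):
    """Score every test item against every word model.

    models: {word: model}; test_items: list of (X, lengths), one per test word;
    score: hypothetical callable (model, X, lengths) -> log likelihood.
    Returns (probabilities, guesses): a list of {word: logL} dicts and the best word per item.
    """
    probabilities, guesses = [], []
    for X, lengths in test_items:
        word_scores = {}
        for word, model in models.items():
            if model is None:
                word_scores[word] = float("-inf")  # training failed for this word
                continue
            try:
                word_scores[word] = score(model, X, lengths)
            except ValueError:
                word_scores[word] = float("-inf")  # this model could not score the item
        probabilities.append(word_scores)
        guesses.append(max(word_scores, key=word_scores.get))
    return probabilities, guesses


def word_error_rate(guesses, answers):
    """Fraction of test words recognized incorrectly."""
    errors = sum(guess != answer for guess, answer in zip(guesses, answers))
    return errors / len(answers)


def toy_score(centre, X, lengths):
    # toy "model" is just a centre value, scored by negative squared distance
    return -sum((x - centre) ** 2 for x in X)


toy_models = {"BOOK": 0.0, "FISH": 5.0}
toy_items = [([0.2, -0.1], [2]), ([4.8], [1]), ([0.4], [1])]
toy_probabilities, toy_guesses = recognize_items(toy_models, toy_items, toy_score)
print(toy_guesses, word_error_rate(toy_guesses, ["BOOK", "FISH", "FISH"]))
```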

from my_recognizer import recognize

from asl_utils import show_errors

features = features_ground_normal # change as needed

model_selector = SelectorDIC # change as needed

# TODO Recognize the test set and display the result with the show_errors method

models = train_all_words(features, model_selector)

test_set = asl.build_test(features)

probabilities, guesses = recognize(models, test_set)

show_errors(guesses, test_set)

from my_recognizer import recognize

features = features_polar # change as needed

model_selector = SelectorBIC # change as needed

# TODO Recognize the test set and display the result with the show_errors method

models = train_all_words(features, model_selector)

test_set = asl.build_test(features)

probabilities, guesses = recognize(models, test_set)

show_errors(guesses, test_set)

from my_recognizer import recognize

features = features_polar # change as needed

model_selector = SelectorCV # change as needed

# TODO Recognize the test set and display the result with the show_errors method

models = train_all_words(features, model_selector)

test_set = asl.build_test(features)

probabilities, guesses = recognize(models, test_set)

show_errors(guesses, test_set)

# Results for below

from collections import defaultdict

results = defaultdict(dict)

results['DIC']['GROUND_NORMAL'] = 0.5274157303370787

results['DIC']['NORM'] = 0.6460674157303371

results['DIC']['POLAR'] = 0.5680898876404494

results['DIC']['DELTA'] = 0.6460674157303371

results["CV"]["NORM"] = 0.6235955056179775

results["CV"]["POLAR"] = 0.6453188465218732

results["CV"]["DELTA"] = 0.6840310687510874

results["CV"]["GROUND"] = 0.631561081984251

pd.DataFrame(results)

results["BIC"]["GROUND_NORMAL"] = 0.601123595505618

results["BIC"]["POLAR"] = 0.550561797752809

results["BIC"]["DELTA"] = 0.6235955056179775

print("WER Table")

df = pd.DataFrame(results)

df

Question 3: Summarize the error results from three combinations of features and model selectors. What was the "best" combination and why? What additional information might we use to improve our WER? For more insight on improving WER, take a look at the introduction to Part 4.

Answer 3:

As we see in our WER table above, the SelectorDIC model selector with the ground_normal feature set produced the lowest word error rate on our final test, at just under 53% WER. People differ in height, arm span, and other proportions. For that reason I chose the ground normal feature set, which measures hand positions relative to the 'ground' (in our case the nose) and then normalizes them per speaker to bring those numbers onto a common scale.

In order to improve our WER, we have a few options. We could gather more data and train on it. More examples per word would make cross-validation practical for words that currently have only one or two examples, and would reduce the risk of over-fitting.

We could also switch to an n-gram model (such as a bigram or trigram model). This approach uses the fact that words often appear in predictable sequences. Instead of judging each word in isolation, as the 0-gram approach above does, the recognizer also weighs the probability of each word given the one or two words before it. Longer n-grams are possible, but they need far more text to estimate reliably, so bigrams and trigrams are the usual choice.

We have made great steps in our ASL recognizer, but still have a long way to go to reach usable recognition rates. The n-gram approach looks the most promising, and combined with more training data it could yield much better results.

Recognizer Unit Tests

Run the following unit tests as a sanity check on the defined recognizer. The test simply looks for some valid values but is not exhaustive. However, the project should not be submitted if these tests don't pass.

from asl_test_recognizer import TestRecognize

suite = unittest.TestLoader().loadTestsFromTestCase(TestRecognize)

unittest.TextTestRunner().run(suite)

PART 4: (OPTIONAL) Improve the WER with Language Models

We've squeezed just about as much as we can out of the model and still only get about 50% of the words right! Surely we can do better than that. Probability to the rescue again in the form of statistical language models (SLM). The basic idea is that each word has some probability of occurrence within the set, and some probability that it is adjacent to specific other words. We can use that additional information to make better choices.

Additional reading and resources

- Introduction to N-grams (Stanford Jurafsky slides)

- Speech Recognition Techniques for a Sign Language Recognition System, Philippe Dreuw et al.: see the improved results of applying LM on this data!

- SLM data for this ASL dataset

Optional challenge

The recognizer you implemented in Part 3 is equivalent to a "0-gram" SLM. Improve the WER with the SLM data provided with the data set in the link above using "1-gram", "2-gram", and/or "3-gram" statistics. The probabilities data you've already calculated will be useful and can be turned into a pandas DataFrame if desired (see next cell).

Good luck! Share your results with the class!

# create a DataFrame of log likelihoods for the test word items

df_probs = pd.DataFrame(data=probabilities)

df_probs.head()
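Here is a sketch of one way to combine the per-word HMM log likelihoods with bigram statistics from the SLM data. For each sentence, a Viterbi-style dynamic program picks the word sequence that maximizes the sum of HMM log likelihoods plus a weighted bigram log probability. The sketch rests on several assumptions:

- The SLM data has already been parsed into a dict mapping (previous word, word) to a natural-log probability. The parsing step is not shown. If the file stores base-10 logs, as ARPA-format files do, multiply by math.log(10).
- lm_weight, floor, and the "<s>" start token are hypothetical choices to tune on held-out data.
- frame_scores would be the probabilities dicts for the items of one sentence, in order. The test set's sentences_index appears to provide that grouping.

```python
import math


def bigram_decode(frame_scores, bigram_logp, lm_weight=10.0, start="<s>", floor=-99.0):
    """Return the best word sequence for one sentence.

    frame_scores: list (one entry per word position) of {word: HMM log likelihood}.
    bigram_logp: {(prev_word, word): natural-log P(word | prev_word)}; unseen pairs get `floor`.
    """
    # best[w] = (total score of the best path ending in w, that path)
    best = {start: (0.0, [])}
    for scores in frame_scores:
        new_best = {}
        for word, hmm_ll in scores.items():
            def transition(prev):
                # path score so far plus the weighted LM score for prev -> word
                return best[prev][0] + lm_weight * bigram_logp.get((prev, word), floor)
            prev = max(best, key=transition)
            new_best[word] = (transition(prev) + hmm_ll, best[prev][1] + [word])
        best = new_best
    return max(best.values(), key=lambda entry: entry[0])[1]


# invented HMM scores for a two-word sentence and a tiny bigram table
frame_scores = [{"JOHN": -10.0, "BOOK": -11.0}, {"WRITE": -20.0, "FISH": -19.5}]
bigram_logp = {("<s>", "JOHN"): math.log(0.5), ("<s>", "BOOK"): math.log(0.5),
               ("JOHN", "WRITE"): math.log(0.9), ("JOHN", "FISH"): math.log(0.01)}
print(bigram_decode(frame_scores, bigram_logp, lm_weight=0.0))  # HMM scores only
print(bigram_decode(frame_scores, bigram_logp, lm_weight=1.0))  # with the bigram LM
```

With lm_weight=0.0 this reduces to the per-word ("0-gram") choice from Part 3. A positive weight lets a likely word pair overturn a slightly better isolated HMM score.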